Connect with our worldwide community of artists and animators!

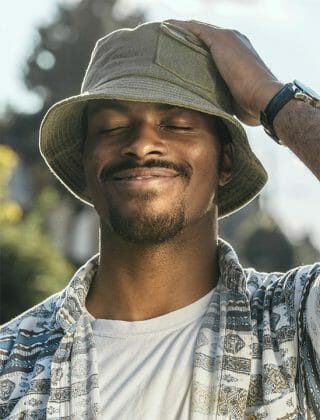

Nwakudu

Penzo

Manktala

Toon Boom Ambassadors

Each year we select 50 ambassadors who advance the craft of animation using Toon Boom Harmony or Storyboard Pro. This program is intended for artists who make exceptional use of our software — whether they are professional animators, freelancers, educators or hobbyists.

MVP Program

The MVP Program is our way of recognizing knowledgeable users who share helpful information with our worldwide community.

Connect with our global community

Whether you need advanced technical help or are just starting your journey in animation, follow the links below to join the conversation in the Toon Boom community!

Want to join the Toon Boom team?

Do you have talent and the desire to work in a fun and rewarding environment? Then Toon Boom has a place for you!
